A new approach to high-speed photography could help capture the clearest-ever footage of light pulses, explosions or neurons firing in the brain, according to a team of ultrafast camera developers. The technique involves shooting 100 billion frames per second in a single exposure without an external light source. That means, for example, there would be no need to set off multiple explosions just to gather enough data to create a video reconstructing exactly how chemicals react to create the blast.

A team of researchers at Washington University in St. Louis introduced their "single-shot compressed ultrafast photography" camera two years ago. Last week they published a study in Optica describing improvements to the original camera that allow it to reconstruct images with finer spatial resolution, higher contrast and a cleaner background, all qualities crucial to detailed observations of high-speed events. The camera is three billion times faster than the one in a typical iPhone, says Lihong Wang, a professor of biomedical engineering at Washington University and a co-author of the study.

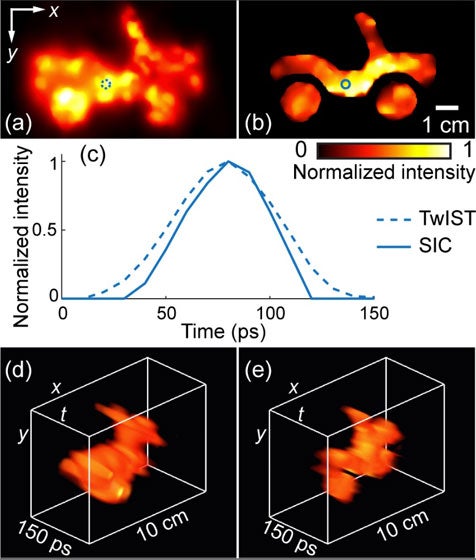

The sharper images come from adding a second integrated circuit—a type of sensor called a charge-coupled device, or CCD—and an enhanced data reconstruction algorithm to the team’s original setup. The algorithm gathers data from both CCDs to deliver higher-quality images. The researchers demonstrated the upgrades by making a movie of a picosecond laser pulse traveling through the air. (One picosecond is equal to one trillionth of a second.)

One area where such a camera could someday prove useful, Wang says, is in capturing information about how the brain's neural networks operate, not just how they are connected. He offers an analogy: if the neural network is represented as city streets, current imaging technology lets scientists see only the layout of those streets. New technologies are needed to see the traffic coursing through them and understand how the whole system functions. Wang hopes his work will ultimately be useful to the White House BRAIN Initiative, a project launched in 2013 that seeks to better understand brain function through the development and use of new technologies.

Another advantage of the new ultrafast technique is that it does not need a laser or other external light source. “If you require external illumination then you have to sync it with the camera,” Wang says. “In some cases you don’t want to or can’t do this—you want to image the native emission of some object such as an explosion, for example.” When people study events like that now, they use a “pump-probe” method, which requires them to repeat the event many times and piece together the data into a single video. “Our camera can be used for real-time imaging of a single event, capturing it all in one shot at extremely high speeds,” Wang adds.

The single-shot compressed ultrafast photography camera is useful for imaging brightly fluorescent objects but does not currently have the sensitivity needed to capture detailed images of neurons, says Keisuke Goda, a University of Tokyo physical chemistry professor and part of a group of researchers who in 2014 built a “sequentially timed all-optical mapping photography” camera that can snap pictures at 4.4 trillion frames per second. But unlike the device Wang and his colleagues developed, the Japanese camera requires a light flash—albeit one lasting just a femtosecond, or one-quadrillionth of a second—to illuminate its subject.

Goda, who was not involved in the team's research, says the Washington University camera also lacks the speed needed to take clear pictures of chemical reactions that unfold on the order of femtoseconds. Wang counters that the speed is more than enough and that the camera's sensitivity has been theoretically estimated to be sufficient, although this has not yet been tested experimentally. "We are seeking funding to conduct [that] experiment," Wang says.

Given the amount of money the government is throwing at its BRAIN project—$85 million in fiscal 2015 alone—Wang and his team might not have to wait too long.

Using the improved version of their compressed ultrafast photography, or CUP, camera (right), the researchers captured a high-quality movie showing laser light reaching different portions of a printout of a toy car at different times. The left image shows the image quality they could achieve before the CUP upgrades. Courtesy of Liren Zhu, Jinyang Liang and Lihong V. Wang, Washington University in St. Louis