Three years ago HPE (formerly Hewlett–Packard) laid out its vision for a radical redesign of the computer that would deliver hitherto unimaginable performance in a single system. By combining all the memory that is usually tied in small chunks to each processor into one vast pool and connecting everything with fiber optics instead of copper wires, HPE sought to turbo-charge computers in ways that packing ever-more transistors on microchips would never achieve. HPE called this next-generation computer simply “The Machine.” Since then, it has set about turning these mythical components into reality.

Fast-forward to today and, although The Machine still has not arrived in all of its glory, HPE is providing a peek at progress so far. The prototype now on display at The Atlantic’s On the Launchpad: Return to Deep Space conference in Washington, D.C., features 1,280 high-performance microprocessor cores—each of which reads and executes program instructions in unison with the others—with access to a whopping 160 terabytes (TB), or 160 trillion bytes, of memory. By comparison, today’s high-end gaming PCs have about 64 gigabytes (64 billion bytes) of memory. Optical fibers, which use photons rather than electrons, pass information among the different components.

“The big message is they have a fully functional large-memory research system up and running,” says Paul Teich, principal analyst with TIRIAS Research, a high-tech research and advisory firm. “HPE's The Machine is defined by its single, huge pool of addressable memory.” A computer assigns an address to the location of each byte of data stored in its memory. The Machine’s processors can access and communicate with those addresses much the way high-performance computer nodes—the kind that model weather forecasting or run simulations used to discover new chemical compounds—work together. The main difference is high-performance computer nodes are spread out over large data centers and require many network connections, protocols and data transfers to share memory, Teich says, adding that some of those connections might use kilometer-long network cables within the same data center. “The Machine is intended to span these distances using direct memory access across very high speed optical cables.”
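To give a rough sense of what a single pool of addressable memory implies, the short Python sketch below works out how many address bits are needed to give every byte its own address. It assumes decimal units (160 TB as 160 trillion bytes, as stated above) and uses a hypothetical helper, `address_bits`. It is only back-of-the-envelope arithmetic, not a description of how HPE's memory controllers actually address the pool.

```python
TB = 10**12  # decimal terabyte, matching "160 trillion bytes"
GB = 10**9   # decimal gigabyte, matching "64 billion bytes"

def address_bits(total_bytes: int) -> int:
    """Smallest number of bits that can give every byte a distinct address."""
    # Addresses run from 0 to total_bytes - 1, so size the largest address.
    return (total_bytes - 1).bit_length()

pool = 160 * TB       # The Machine prototype's memory pool
gaming_pc = 64 * GB   # the high-end gaming PC used for comparison

print(address_bits(pool))       # 48
print(address_bits(gaming_pc))  # 36
print(pool // gaming_pc)        # 2500
```

Under these assumptions the pool needs 48-bit addresses and holds as much memory as 2,500 such gaming PCs.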

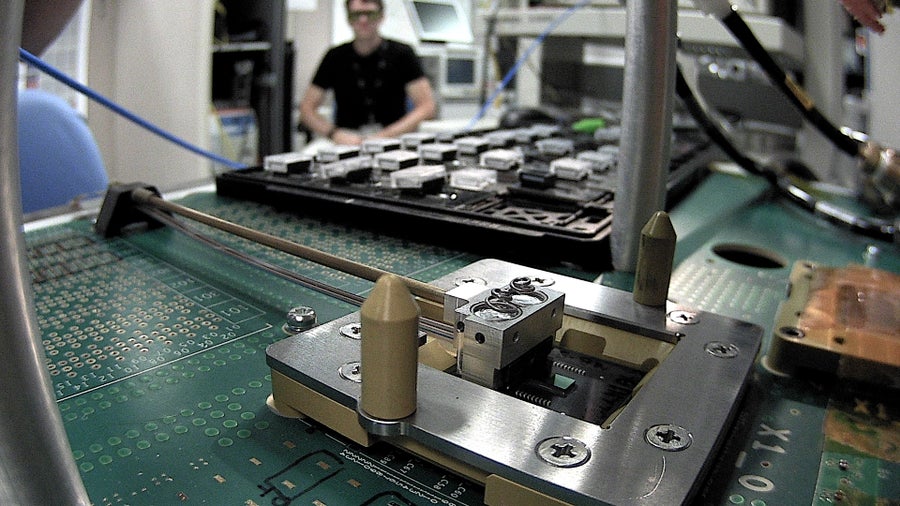

HPE’s X1 photonics interconnect module on the ‘Kraken’ test stand at Hewlett Packard’s Silicon Design Labs in Fort Collins, Colo. Microscale laser technology replaces traditional copper wires with light to speed data transfer between electronic devices. Credit: Hewlett Packard Enterprise

Missing from the setup are the much-coveted memristors, memory components designed to retain data without power, which are still a work in progress. Instead The Machine’s 160 TB of memory comes in the form of dynamic random access memory (DRAM), which stores each bit of data in a separate capacitor within an integrated circuit but loses that data when the power is switched off. Unlike DRAM, which must be reloaded from disk storage each time a computer is powered on, memristors would in principle be able to pick up where they left off when power is restored. Flash memory has a similar “nonvolatile” ability to retain data without power, but it is far slower than DRAM. The main problem with memristors is that no one has figured out how to make large numbers of them that are reliable enough for commercial electronic devices. Researchers continue to puzzle over the best materials to use and the most effective way of manufacturing them.

HPE chose DRAM for this prototype because it allowed the company to include the large amount of memory needed to test the new system’s memory controllers, photonic interconnects and microprocessors, says Kirk Bresniker, Hewlett–Packard Labs chief architect and an HPE Fellow. Capable of working with five times the amount of information stored in the Library of Congress, the system features “the world’s largest memory array and is running a breakthrough application requiring sophisticated mathematics that allows this architecture to shine.”

The program on demo at The Atlantic event in D.C. was written to detect security threats within a simulated corporate network. “If you look at the relationships between devices accessing certain areas of a network, you can discern—if you look closely at all of the access attempts—which are done by normal employees and which are unusual because a system has been compromised by malware,” Bresniker says. “The challenge is that such threats show themselves only through very subtle and anomalous access patterns camouflaged within the billions of normal system access requests that transit a corporate network every day.” The Machine prototype’s ability to load all of that data into its memory array allows it to connect relationships between the data and identify the security threats, he says. “Partitioning the analysis of this data across multiple networked devices would be too slow to find those subtle correlations in the data.”

HPE’s decision to demonstrate graph analytics is significant because that type of analysis is used to perform the kinds of functions that, for example, enable social media networks to make recommendations based on user preferences and behavior, Teich says. A system the scale of The Machine has the capacity to keep massive data sets—such as all the relationships among people using Facebook or Twitter—in memory and analyze them in real time, he adds.
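The idea of spotting unusual access patterns in a graph of devices and network resources can be illustrated with a toy Python sketch. Everything here is an assumption made for illustration: the function name `flag_unusual_devices`, the thresholds, the device and resource names, and the simple "rarely shared resource" rule. HPE has not published the method used in its demo, which is certainly far more sophisticated. The sketch only shows why holding the whole graph in memory helps: every access can be compared against every other in one pass.

```python
from collections import defaultdict

def flag_unusual_devices(access_log, max_peers=1, min_rare_hits=2):
    """Return devices that touch several resources almost no one else uses."""
    # Build the in-memory graph: resource -> set of devices that accessed it.
    devices_by_resource = defaultdict(set)
    for device, resource in access_log:
        devices_by_resource[resource].add(device)

    # A resource counts as "rare" when at most max_peers devices touch it.
    rare_hits = defaultdict(int)
    for resource, devices in devices_by_resource.items():
        if len(devices) <= max_peers:
            for device in devices:
                rare_hits[device] += 1

    # Flag devices with at least min_rare_hits rare resources.
    return sorted(d for d, hits in rare_hits.items() if hits >= min_rare_hits)

# Hypothetical toy log of (device, resource) access attempts.
log = [
    ("laptop-1", "mail"), ("laptop-2", "mail"), ("laptop-3", "mail"),
    ("laptop-1", "wiki"), ("laptop-2", "wiki"), ("laptop-3", "wiki"),
    ("laptop-3", "payroll-db"), ("laptop-3", "hr-archive"),
]
print(flag_unusual_devices(log))  # ['laptop-3']
```

In this toy log all three laptops use mail and the wiki, but only laptop-3 reaches two resources no other device touches, so it is the one flagged.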

Close-up of an electronic circuit board in HPE’s Memory-Driven Computing prototype in Fort Collins, Colo. The new computer architecture collapses memory and storage into a single vast pool of memory to increase processing speed and improve energy efficiency. Credit: Hewlett Packard Enterprise

HPE will develop The Machine, the largest research and development program in the company’s long history, in three stages, of which today’s unveiling is the first. In the second and third phases the company plans to move beyond DRAM to test phase-change random access memory (PRAM) and memristors, respectively, over the next few years. PRAM uses electrical current to store data in a glassy substance called chalcogenide, whose atoms rearrange when heated. Chalcogenide is also used in rewritable CDs and DVDs, which store information with the help of a laser. Rival IBM is taking a somewhat different tack in its efforts to develop next-generation computing, focusing on neuromorphic systems that mimic the human brain’s structure as well as quantum computers that leverage the quantum states of subatomic particles to store information.

Bresniker says HPE’s exploration of memristors and next-generation computing architectures is driven by the exponential growth of data as Moore’s law stops scaling. The end of Moore’s law, first described by Intel co-founder Gordon Moore in 1965, means chipmakers will no longer be able to shrink transistors enough to continue the trend of doubling how many they can fit on their integrated circuits every 12 or 18 months. “So many of the interesting problems we’re looking at, intelligent power grids and smart cities that feed into the Internet of Things, will involve such large volumes of data,” he says. Much of that data will never even see a data center because it will live on intelligent devices and sensors at the edges of the network. “The ability to work with all of this data has to match the demand, which does not seem to have any capping function yet,” he says. “Our ability to generate data as a species is an exponential curve that does not seem to have an upper limit.”