“Before we work on artificial intelligence, why don’t we do something about natural stupidity?” computer scientist Steve Polyak once joked. The latter might be a tall order. But AI, it appears, just took one small step for robotkind.

New research published June 14 in Science reports that, for the first time, scientists have developed a machine-learning system that can observe a scene from multiple angles and predict what it will look like from a new, never-before-observed angle. With further development, the technology could lead to more autonomous robots in industrial and manufacturing settings.

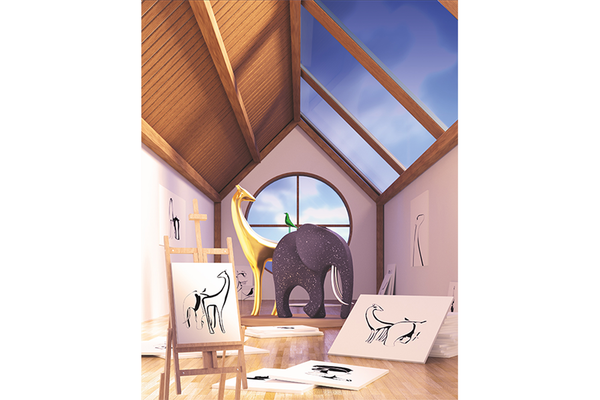

Much as we can survey a friend’s apartment from one side of the living room and have a pretty good sense of what it looks like from the other, the new technology can do the same for a scene within a three-dimensional computer image.

Devised by researchers at the artificial intelligence company DeepMind, which Google acquired in 2014, the new system can “learn” the three-dimensional layout of a space without any human supervision. This Generative Query Network, or GQN, as its developers call it, is first trained by observing simple computer-generated scenes with varying lighting and object arrangements. It can then be shown several images of a new environment and accurately predict what that environment looks like from any angle within it. Unlike the hyperconnected perceptual regions of the human brain, the system learns and processes properties such as shape, size and color separately and then assimilates the data into a cohesive “view” of a space.

“Humans and other animals have a rich understanding of the visual world in terms of objects, geometry, lighting, etcetera,” says Ali Eslami, lead author on the new paper and a research scientist at DeepMind. “This capability is developed through a combination of innate knowledge and unsupervised learning. Our motivation behind this research is to understand how we can build computer systems that learn to interpret the visual world in a similar manner.”
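For readers who want a concrete picture of the “several views in, new view out” idea, here is a toy sketch in Python. It is not DeepMind’s code: the hand-written `encode_view` function stands in for GQN’s learned neural network, and the image format, the (x, y, yaw) camera pose and all function names are hypothetical. What it tries to show is the overall shape of the approach as the GQN paper describes it: each observed image and its camera position are summarized as a vector, those vectors are added together into a single scene representation, and that representation is then paired with a new, unseen camera position from which a generator would predict the view.

```python
import math
from typing import List, Sequence, Tuple

# Hypothetical formats for this sketch (not GQN's actual inputs):
# an "image" is a flat list of pixel brightnesses in [0, 1],
# a "viewpoint" is a camera pose (x, y, yaw in radians).
Image = Sequence[float]
Viewpoint = Tuple[float, float, float]
Observation = Tuple[Image, Viewpoint]


def encode_view(image: Image, viewpoint: Viewpoint) -> List[float]:
    """Summarize one (image, viewpoint) pair as a 5-number vector.

    Hand-written stand-in for GQN's learned representation network.
    """
    x, y, yaw = viewpoint
    brightness = sum(image) / len(image)
    contrast = max(image) - min(image)
    return [brightness, contrast, x * brightness, y * brightness,
            math.cos(yaw) * contrast]


def scene_representation(observations: Sequence[Observation]) -> List[float]:
    """Add the per-view vectors element by element.

    Summation makes the result independent of the order of the views,
    and any number of views can be combined.
    """
    total = [0.0] * 5
    for image, viewpoint in observations:
        for i, value in enumerate(encode_view(image, viewpoint)):
            total[i] += value
    return total


def generator_input(representation: Sequence[float],
                    query: Viewpoint) -> List[float]:
    """Pair the scene summary with a new, unobserved camera pose.

    In GQN a learned generation network would turn this into a predicted
    image; this sketch only assembles that network's input.
    """
    return list(representation) + list(query)


# Usage: two observed views of the same (made-up) scene.
views = [
    ([0.0, 0.5, 1.0], (0.0, 0.0, 0.0)),
    ([0.25, 0.25, 0.75], (1.0, 2.0, math.pi)),
]
r = scene_representation(views)
r_reversed = scene_representation(list(reversed(views)))
query = generator_input(r, (0.5, -1.0, math.pi / 2))
print(r == r_reversed, len(query))
```

Adding the vectors is one simple way to make the scene summary independent of the order in which views arrive and to fold in as many views as are available. In the real system, both the encoder and the image generator are trained from data rather than written by hand.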

Machine learning as a field has barreled forward in recent years, and GQN builds on many past systems, including the numerous “deep-learning” models based on neural networks inspired by the human brain. Deep learning is a form of machine learning in which a computer “learns” from exposure to images or other data to, say, detect the features that make an object a cat or a spoon. Typically, it does so after observing many images of scenes in which those objects have been labeled. GQN uses deep learning to build a form of computerized “vision” that enables navigation through complex scenes. What sets it apart from many other systems is its ability to learn on its own, purely from observation and without human supervision. It analyzes unlabeled objects and the space they occupy in a scene and then applies what it has learned to another image. “This gives GQN increased flexibility and frees us from having to create a large collection of models for every object in the world,” Eslami says. In other words, it can recognize a novel object based on prior exposure to a different object, using characteristics such as shape and color.

For now the new system has been designed to work only with computer-generated scenes, not to control a robot’s actions in the real world. But Eslami and his colleagues plan to keep developing GQN with more complex geometry and situations, hoping that one day such fully autonomous scene understanding could lend itself to any number of industrial applications. In theory, robots could be trained on one task and redeployed on another without extensive reprogramming. GQN could bring down manufacturing costs, increase production speed and streamline the assembly of just about anything cobbled together by robots. “This work is interesting and exciting,” says Joshua Tenenbaum, a professor of cognitive science and computation at the Massachusetts Institute of Technology, who cautions that the technology has a way to go before it sees any practical use. “In my view, this research is still rather far from direct applications,” he notes. “From a strictly practical engineering point of view, the problems it solves can currently be solved as well or better by other means, which are less dependent on pure learning-based methods.”

Tenenbaum, who was not involved with the project, adds, “In the long term this work could help advance the state of robotic perception and control, leading to systems that are more adaptive and autonomous than today's AI technologies.”

As AI advances to the point where machines take on qualities once exclusive to humans, there are, of course, dystopian concerns: namely, that we will bring about our own demise at the hands of a smarter, more powerful population of cyber beings, whatever form they may take. And as German philosopher Thomas Metzinger has cautioned for years, creating certain mental states in machines could cause those machines to experience pain and suffering.

Tenenbaum is not worried. “Any fear of developing computers that are ‘smarter’ than us, in the practically accessible future, are unfounded,” he says. “The system presented here is a noteworthy advance over previous inverse-graphics systems, but it is far from capturing the perception abilities that even young children possess. It also requires vast quantities of training data, which children do not, suggesting that its learning abilities are not nearly as powerful as those of human beings.”

Computer science founding father Alan Turing proposed that a computer could be considered intelligent if it could fool a person into believing it was human. Any true success on Turing’s test would require a machine that exhibits general intelligence, one that can do calculus, tie shoes and cook supper, all the things that humans do. Such a machine remains nothing more than a futurist fantasy for now.