Emma Mattes has given up on Siri. No matter how clearly or slowly Mattes speaks, the Apple iPhone’s iconic voice-recognition technology has been no help to the 69-year-old woman from Seminole, Fla. She struggles with spasmodic dysphonia, a rare neurological voice disorder that causes involuntary spasms in the vocal cords, producing shaky and unstable speech. Her car’s Bluetooth voice system does not understand her either.

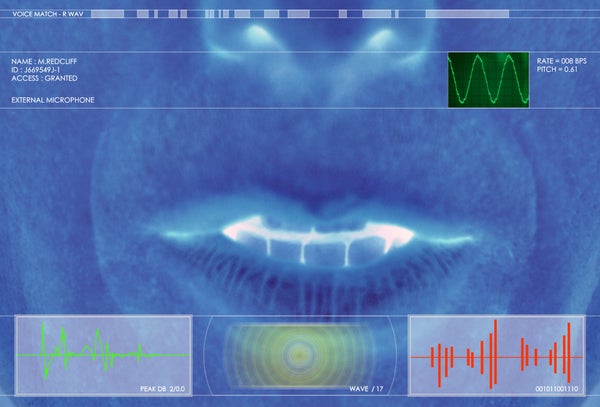

Voice interfaces like Siri have now been sold in millions of products ranging from smartphones and Ford vehicles to smart TVs and the Amazon Echo. These systems promise to let people check the weather, lock their house doors, place a hands-free call while driving, record a TV show and buy the latest Beyoncé album with simple voice commands. They tout freedom from buttons and keyboards and promise nearly endless possibilities.

But the glittering new technology cannot be used by the more than nine million people in the U.S. who, like Mattes, have voice disabilities, or by people who stutter or have cerebral palsy and other disorders. “Speech recognizers are targeted at the vast majority of people at that center point on a bell curve. Everyone else is on the edges,” explained Todd Mozer, CEO of the Silicon Valley–based company Sensory, which has voice-recognition chips in a variety of consumer products like Samsung Galaxy phones and Bluetooth headsets.

Worse, help for people like Mattes may be a long way off. Although voice recognition is getting more accurate, experts say it is still not very good at recognizing many atypical voices or speech patterns. Researchers are trying to develop more inclusive voice recognizers, but that technology has serious hurdles to overcome.

People on Mozer’s “edges” include the approximately 4 percent of the U.S. population who had trouble using their voices for one week or longer during the past 12 months because of a speech, language or vocal problem, according to the National Institute on Deafness and Other Communication Disorders. Dysarthria, which is slow or slurred speech that can be caused by cerebral palsy, muscular dystrophy, multiple sclerosis, stroke and a variety of other medical conditions, is part of this spectrum of problems. And the trouble extends worldwide. Cerebral palsy, for instance, affects the speech of Mike Hamill, of Invercargill, New Zealand, who was born with the condition and developed swallowing and throat control difficulties in his 30s. As a result, his speech is often strained and erratic.

People who stutter also have trouble using voice-recognition technology, like automated phone menus, because these systems do not recognize their disjointed speech, says Jane Fraser, president of The Stuttering Foundation of America.

There are other problems, such as vocal cord paralysis or vocal cysts, which tend to be less severe and are usually temporary. But these disorders can still reduce the accuracy of speech recognition. For example, in a 2011 study that appeared in Biomedical Engineering Online, researchers used a conventional automatic speech-recognition system to compare its accuracy on normal voices with its accuracy on voices affected by six different vocal disorders. The technology was 100 percent correct at recognizing the speech of normal subjects, but its accuracy ranged from 56 to 82.5 percent for patients with different types of voice ailments.

For individuals with severe speech disorders like dysarthria, this technology’s word-recognition rates can be between 26.2 percent and 81.8 percent lower than for the general population, according to research published in Speech Communication by Frank Rudzicz, a computer scientist at the Toronto Rehabilitation Institute and assistant professor at the University of Toronto. “There’s a lot of variation among people with these disorders, so it’s hard to narrow down one model that would work for all of them,” Rudzicz says.

This vocal variation is exactly why systems like Siri and Bluetooth voice systems have such a hard time understanding people with speech and voice disorders. Around 2012 companies started using neural networks to power voice-recognition products. Neural networks learn to recognize speech by finding predictable patterns in a large variety of speech samples. Intelligent personal assistants like Siri and Google Now were not that robust when they first came out in 2011 and 2012, respectively. But they got better as they acquired more data from many different speakers, Mozer says. Now these systems can do a lot more. Many companies boast a word error rate of 8 percent or less, says Shawn DuBravac, chief economist and senior director of research at the Consumer Technology Association.

Amazon Echo, which became widely available in June 2015, has a voice recognizer called Alexa that is designed to perform specific functions such as fetching news from local radio stations, accessing music streaming services and ordering merchandise on Amazon. The device also has voice controls for alarms and timers as well as shopping and to-do lists. Amazon has added more functions over time.

But speech and vocal disabilities, by their nature, produce random and unpredictable voices, so voice-recognition systems cannot identify patterns to train on. Apple and Amazon declined to address this problem directly when asked to comment, but said via email that, in general, they intend to improve their technology. Microsoft, which developed the speech-recognition personal assistant Cortana, said via a spokesperson that the company strives to be “intentionally inclusive of everyone from the beginning” when designing and building products and services.

To find solutions, companies and researchers have looked to lip-reading, which some deaf and hard-of-hearing people have used for years. Lip-reading technology could provide additional data to make voice recognizers more accurate, but these systems are still in their early stages. At the University of East Anglia in England, computer scientist Richard Harvey and his colleagues are working on lip-reading technology that spells out speech when voice recognition is not enough to determine what a person is saying. “Lip-reading alone will not make you able to deal with speech disability any better. But it helps because you get more information,” Harvey says.

Some products and systems might be more amenable to learning unusual voices, researchers say. A bank’s automated customer-service phone line and a car’s hands-free phone system both have limited vocabularies, so, hypothetically, it would be easier to build a set of algorithms that recognize different versions and pronunciations of a fixed set of words, Harvey says. But these systems still have to learn some unique words, such as the user’s name.

Another possibility is that devices could ask users clarifying questions when their voice-recognition systems do not immediately understand them, DuBravac says.

Better-designed neural networks could eventually be part of the solution for people with speech disabilities; it is just a matter of having enough data. “The more data that becomes available, the better this technology is going to get,” Mozer says. That is already starting to happen with different languages and accented speech. According to Apple, Siri has so far learned 39 languages and language variants.

But as this technology in its current state becomes more embedded in our daily lives, researchers such as Rudzicz warn that multitudes of people with speech and vocal problems will be excluded from connected “smart” homes with voice-activated security systems, light switches and thermostats, and they might not be able to use driverless cars. “These individuals need to be able to participate in our modern society,” he says. So far, attempts by tech companies to include them are little more than talk.