If you enjoy computerized personality tests, you might consider visiting Apply Magic Sauce (https://applymagicsauce.com). The Web site prompts you to enter some text you have written—such as e-mails or blogs—along with information about your activities on social media. You do not have to provide social media data, but if you want to do it, you either allow Apply Magic Sauce to access your Facebook and Twitter accounts or follow directions for uploading selected data from those sources, such as your history of pressing Facebook’s “like” buttons. Once you click “Make Prediction,” you will see a detailed psychogram, or personality profile, that includes your presumed age and sex, whether you are anxious or easily stressed, how quickly you give in to impulses, and whether you are politically and socially conservative or liberal.

Examining the psychological profile that the algorithm derives from your online traces can certainly be entertaining. On the other hand, the algorithm’s ability to draw inferences about us illustrates how easy it is for anyone who tracks our digital activities to gain insight into our personalities—and potentially invade our privacy. What is more, psychological inferences about us might be exploited to manipulate, say, what we buy or how we vote.

Surprising Accuracy

It seems that our like clicks by themselves can be pretty good indicators of what makes us tick. In 2015 David Stillwell and Youyou Wu, both at the University of Cambridge, and Michal Kosinski of Stanford University demonstrated that algorithms can evaluate what psychologists call the Big Five dimensions of personality quite accurately just by examining a Facebook user’s likes. These dimensions—openness to experience, conscientiousness, extroversion, agreeableness and neuroticism—are viewed as representing the basic dimensions of personality. The degree to which they are present in individuals describes who those people are.

The researchers trained their algorithm using data from more than 70,000 Facebook users. All the participants had earlier filled out a personality questionnaire, and so their Big Five profile was known. The computer then went through the Facebook accounts of these test subjects looking for likes that are often associated with certain personality characteristics. For example, extroverted users often give a thumbs-up to activities such as “partying” or “dancing.” Users who are especially open may like Spanish painter Salvador Dalí.
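The Python sketch below makes the training step concrete, but it is deliberately simplified and is not the researchers' model. It assumes a toy scheme. Each like gets a weight equal to how far the average questionnaire score of the users who clicked it sits above or below the overall average. A new user's score is then predicted from the weights of that user's likes. The users, likes and extroversion scores are invented for illustration.

```python
from statistics import mean

def train_like_weights(users):
    """users: list of (set_of_likes, questionnaire_score) pairs.

    Returns the overall mean score and, for each like, how far the average
    score of the users who clicked it lies above or below that mean.
    """
    overall = mean(score for _, score in users)
    scores_by_like = {}
    for likes, score in users:
        for like in likes:
            scores_by_like.setdefault(like, []).append(score)
    weights = {like: mean(s) - overall for like, s in scores_by_like.items()}
    return overall, weights

def predict(likes, overall, weights):
    """Predict a score from the weights of the likes the model has seen before."""
    known = [weights[like] for like in likes if like in weights]
    if not known:
        return overall  # no informative likes: fall back to the population mean
    return overall + mean(known)

# Invented training data: likes plus extroversion scores on a 1-5 scale.
training = [
    ({"partying", "dancing"}, 4.5),
    ({"dancing"}, 4.0),
    ({"reading"}, 2.0),
    ({"reading", "chess"}, 1.5),
]
overall, weights = train_like_weights(training)
print(predict({"dancing", "partying"}, overall, weights))  # 4.375
print(predict({"knitting"}, overall, weights))  # 3.0
```

In this toy model, a user who likes "dancing" and "partying" is predicted to be more extroverted than average. A like the model has never seen falls back to the population mean. Real systems work with far more users and likes and typically fit a regularized statistical model rather than taking simple averages.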

Then the investigators had the program examine the likes of other Facebook users. If the software had as few as 10 likes to analyze, it was able to evaluate that person about as well as a co-worker did. Given 70 likes, the algorithm was about as accurate as a friend. With 300, it was more successful than the person’s spouse. Even more astonishing to the researchers, feeding likes into their program enabled them to predict whether someone suffered from depression or took drugs and even to infer what the individual studied in school.

The project grew out of work that Stillwell began in 2007, when he created a Facebook app that enabled users to fill out a personality questionnaire and get feedback in exchange for allowing investigators to use the data for research. Six million people had participated by the time the app was shut down in 2012, and about 40 percent of them gave the researchers permission to access their past Facebook activities, including their history of likes.

Researchers around the world became very interested in the data set, parts of which were made available in anonymized form for noncommercial research. More than 50 articles and doctoral dissertations have been based on it, in part because the Facebook data reveal what people actually do when they are unaware that their behavior is the subject of research.

Commercial Applications

One obvious use for such psychological insights beyond the realm of research is in advertising, as Sandra C. Matz of Columbia University and her colleagues (among them Stillwell and Kosinski) demonstrated in a 2017 paper. The team made use of something that Facebook offers to its business customers: the ability to target advertisements to people with particular likes. They developed 10 different ads for the same cosmetic product, some meant to appeal to extroverted women and some to introverts. One of the “extrovert” ads, for example, showed a woman dancing with abandon at a disco; underneath it the slogan read, “Dance like no one’s watching (but they totally are).” An “introvert” ad showed a young woman applying makeup in front of a mirror. The slogan said, “Beauty doesn’t have to shout.”

Both campaigns ran on Facebook for a week and together reached about three million female Facebook users, who received messages that were matched to their personality type or to the opposite of their type. When the ads fit the personality, Facebook viewers were about 50 percent more likely to buy the product than when the ads did not fit.
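"About 50 percent more likely" compares two purchase rates. It does not mean a gain of 50 percentage points. The minimal Python sketch below shows the arithmetic. The counts are invented, because the study's raw figures are not given here.

```python
def relative_lift(matched_buyers, matched_reached, mismatched_buyers, mismatched_reached):
    """Return how much higher the purchase rate was for personality-matched ads,
    as a fraction of the mismatched rate (0.5 means 50 percent more likely)."""
    matched_rate = matched_buyers / matched_reached
    mismatched_rate = mismatched_buyers / mismatched_reached
    return matched_rate / mismatched_rate - 1

# Hypothetical counts: 300 purchases per 100,000 matched viewers
# versus 200 purchases per 100,000 mismatched viewers.
lift = relative_lift(300, 100_000, 200, 100_000)
print(f"{lift:.0%} more likely")  # 50% more likely
```

With these made-up numbers, the two purchase rates (0.3 and 0.2 percent) differ by only a tenth of a percentage point. That is still a 50 percent relative increase.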

Ad aimed at extroverts (left): “Dance like no one’s watching (but they totally are).” Ad aimed at introverts (right): “Beauty doesn’t have to shout.” Two different advertising campaigns for a cosmetic were designed to appeal either to extroverts or to introverts and were displayed to female Facebook users in a 2017 study. Sales were highest when the ads fit the women’s personality. The ads shown were among 10 that were used. Credit: Paul Bradbury Getty Images (left); Getty Images

Advertisers often pursue a different approach: they look for customers who have bought or liked a particular product in the past to ensure that they target people who are already well disposed to their wares. In limiting a target group, it makes sense to take previous consumption into account, Matz says, but this study demonstrated the power of adapting how the message is communicated to a consumer’s personality.

It is a power not lost on marketers. Numerous companies have discovered automated personality analysis and turned it into a business model, boasting about the value it can provide to their customers—although how well the methods used by any individual company actually work is hard to judge.

The now defunct Cambridge Analytica offers an infamous example of how personality profiling based on Facebook data has been applied in the real world. In March 2018 news reports alleged that as early as 2014, the company had begun buying personal Facebook data about more than 80 million users. (Stillwell’s group makes a point of emphasizing that Cambridge Analytica had no access to its data, algorithms or expertise.) The company claimed to specialize in personalized election advertising: the packaging and pinpoint targeting of political messages. In 2016 Alexander Nix, then the company’s CEO, described Cambridge Analytica’s strategy in a presentation in New York City, providing an example of how to convince people who care about gun rights to support a selected candidate. (See a YouTube video of his talk at www.youtube.com/watch?v=n8Dd5aVXLCc.) For voters deemed neurotic (who are prone to worrying), Nix proposed an emotionally based campaign featuring the threat of a burglary and the protective value of a gun. For agreeable people (who value community and family), on the other hand, the approach could feature fathers teaching their sons to hunt.

Cambridge Analytica worked for the presidential campaigns of Ted Cruz and Donald Trump. Nix claimed in his talk that the strategy helped Cruz advance in the primaries, and the company later took some credit for Trump’s victory—although exactly what it did for the Trump campaign and how valuable its work was are in dispute.

Philosopher Philipp Hübl, who, among other things, examines the power of the unconscious, is dubious of the Trump claim. He notes that selling cosmetics costing a few dollars, as in Matz’s study, is very different from swaying voters in an election campaign. “In elections, even undecided voters weigh the possibilities, and it takes more than a few banner ads and fake news to convince them,” Hübl says.

Matz, too, sees limits in what psychological marketing in its current stage of development can accomplish in political campaigns. “Undecided voters in particular may be made more receptive to one or another position,” she says, “but turning a Clintonista into a MAGA voter, well, that was pretty unlikely to happen.” Nevertheless, Matz thinks that such marketing is likely to have some effect on voters, calling the notion that it has no effect “extremely improbable.”

Beyond Facebook

Facebook activity is by no means the only data that can be used to assess your personality. In a 2018 study, computer scientist Sabrina Hoppe of the University of Stuttgart in Germany and her colleagues fitted students with eye trackers. The volunteers then walked around campus and went shopping. Based on their eye movements, the researchers were able to predict four of the Big Five dimensions correctly.

How we speak—our individual tone of voice—may also divulge clues about our personality. Precire Technologies, a company based in Aachen, Germany, specializes in analyzing spoken and written language. It has developed an automated job interview: job seekers talk by telephone with a computer, which then creates a detailed psychogram based on their responses. Among other things, Precire analyzes word selection and certain word combinations, sentence structures, dialectal influences, errors, filler words, pronunciations and intonations. Its algorithm is based on data from more than 5,000 interviews with individuals whose personalities were analyzed.

Precire’s clients include German company Fraport, which manages the Frankfurt Airport, and the international recruitment agency Randstad, which uses the software as a component of its selection process. Andreas Bolder, head of personnel at Randstad’s German branch, says the approach is more efficient and less costly than certain more time-consuming tests.

Software that analyzes faces for clues to mood, personality or other psychological features is being explored as well. It highlights both what is possible and what to fear.

Possibilities and Problems

In early 2018 four programmers at a hacker conference, nwHacks, introduced an app that discerns mood by analyzing face-tracking data captured from the front camera of the iPhone X. The app, called Loki, recognizes emotions such as happiness, sadness, anger and surprise in real time as someone looks at a news feed, and it delivers content based on the person’s emotional state. In an article about Loki, one of the developers said that he and his colleagues created the app to “illustrate the plausibility of social media platforms tracking user emotions to manipulate the content that gets shown to them.” For instance, when a user engages with a news feed or other app, such software could secretly track the person’s emotions and use this “emotion detector” as a guide for targeting advertising. Studies have shown that people tend to loosen their purse strings when they are in a good mood; advertisers might want to push ads to your phone when you are feeling particularly up.

Astonishingly, Loki took just 24 hours to build. In making it, the developers relied on machine learning, a common approach to automated image recognition. They first trained the program with about 100 facial expressions, labeling the emotions that corresponded to each expression. This training enabled the app to “figure out” how facial expression relates to mood, such as, presumably, that the corners of the mouth rise when we smile.
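The article does not say which learning method Loki's developers used. The Python sketch below illustrates the general idea of learning from labeled examples with a simple nearest-centroid classifier. It averages the feature values for each labeled emotion and then assigns a new face to the closest average. The two features are hypothetical: a "mouth-corner lift" score and a "brow raise" score, each between 0 and 1. All the example values are invented.

```python
from statistics import mean

def train_centroids(examples):
    """examples: list of (feature_vector, emotion_label) pairs.
    Returns the average feature vector (centroid) for each label."""
    grouped = {}
    for features, label in examples:
        grouped.setdefault(label, []).append(features)
    return {label: tuple(mean(col) for col in zip(*vectors))
            for label, vectors in grouped.items()}

def classify(features, centroids):
    """Assign the label whose centroid is closest (squared Euclidean distance)."""
    def sq_dist(centroid):
        return sum((f - c) ** 2 for f, c in zip(features, centroid))
    return min(centroids, key=lambda label: sq_dist(centroids[label]))

# Hypothetical features: (mouth_corner_lift, brow_raise), hand-labeled.
training = [
    ((0.9, 0.3), "happiness"), ((0.8, 0.2), "happiness"),
    ((0.1, 0.2), "sadness"), ((0.2, 0.1), "sadness"),
    ((0.4, 0.9), "surprise"), ((0.5, 0.8), "surprise"),
]
centroids = train_centroids(training)
print(classify((0.85, 0.3), centroids))  # happiness
```

The program is never told that raised mouth corners mean a smile. It picks up that association from the labeled examples, which is the sense in which such an app "figures out" the link between expression and mood.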

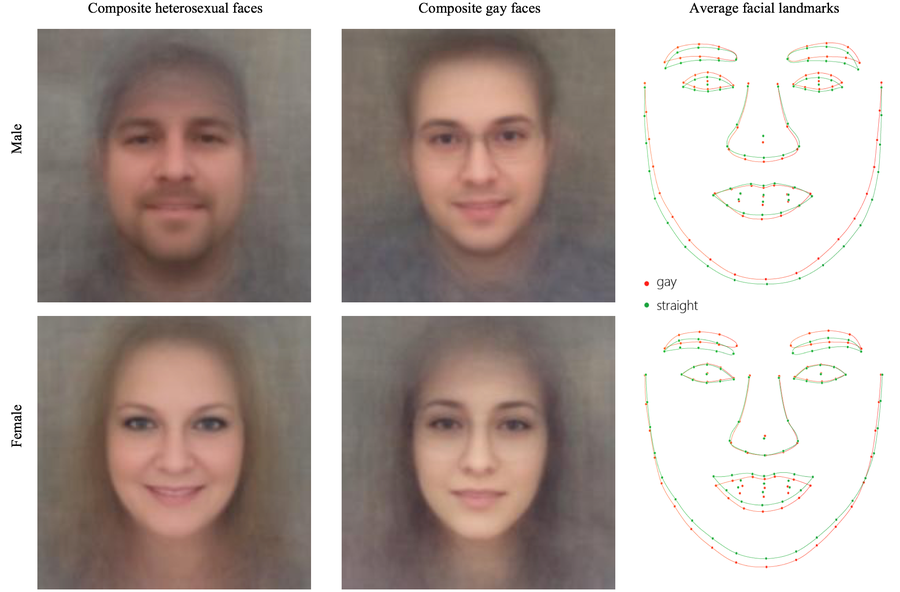

Kosinski, too, has examined whether automated image-recognition technology can surreptitiously discern psychological traits from digital activity. In an experiment published in 2018, he and his Stanford colleague Yilun Wang fed hundreds of thousands of photographs from a dating portal into a computer, along with information on whether the person in question was gay or straight. They then presented the software with pairs of unknown faces: one of a homosexual person and another of a heterosexual individual of the same sex. The program correctly distinguished the sexual orientation of men 81 percent of the time and of women 71 percent of the time; human beings were much less accurate in their assessments.
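The program was tested on pairs of faces. Its 81 and 71 percent figures therefore describe how often it ranked the correct member of a pair higher, not how often it labeled a single face correctly. That pairwise score corresponds to what statisticians call the area under the ROC curve (AUC). The minimal Python sketch below shows how such a score is computed. It uses invented classifier scores for two generic groups, A and B.

```python
from itertools import product

def pairwise_accuracy(scores_a, scores_b):
    """Share of all (a, b) pairs in which the group-A item gets the higher score.
    Ties count as half a success, the same as a coin flip."""
    wins = 0.0
    for a, b in product(scores_a, scores_b):
        if a > b:
            wins += 1
        elif a == b:
            wins += 0.5
    return wins / (len(scores_a) * len(scores_b))

# Invented scores from a hypothetical classifier (higher = "more likely group A").
group_a = [0.9, 0.7, 0.4]
group_b = [0.6, 0.3, 0.5]
print(round(pairwise_accuracy(group_a, group_b), 3))  # 0.778
```

A score of 0.5 would mean the program does no better than guessing which face in a pair belongs to which group.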

Given that gay people continue to fear for their lives in many parts of the world, it is perhaps not surprising that the results elicited negative reactions. Indeed, Kosinski got death threats. “People didn’t understand that my intention wasn’t to show how cool it is to predict sexual orientation,” Kosinski says. “The whole paper is actually a warning, a call for increasing privacy.”

By analyzing 83 measuring points on faces, an algorithm correctly identified the sexual orientation of many men based on their photograph in a dating portal. In addition, the program generated supposedly “archetypal straight” (left) and “archetypal gay” (center) faces and calculated how the facial expressions differed on average (right). The researchers say they conducted the study partly to warn that photographs posted on the Internet could be mined for private data. Credit: Yilun Wang and Michal Kosinski; Source: “Deep Neural Networks Are More Accurate Than Humans at Detecting Sexual Orientation from Facial Images,” by Michal Kosinski and Yilun Wang, in Journal of Personality and Social Psychology, Vol. 114, No. 2; February 2018.

In late 2016 computer scientists at the Swiss Federal Institute of Technology Zurich demonstrated that the personalities of Facebook users can be pinned down more precisely if their likes are coupled with analyses of their profile photograph. Interestingly, the researchers, like many others who use machine-learning software, do not know exactly how the algorithm forms its judgment—for example, whether it relies on such features as a person’s haircut or the formality of the individual’s dress. They are in the dark because machine-learning programs do not reveal the rules they apply in drawing conclusions. The investigators know that the software finds correlations between features in the data and personality but not exactly how it concludes that a man in a photograph is attracted to other men or which characteristics in my e-mail might indicate that I am conscientious and somewhat introverted.

“The image we are often given is that predicting personality is a kind of magic,” says Rasmus Rothe, who was involved in the Swiss study. “But in the final analysis, computer models do nothing other than find correlations.”

The use of facial-recognition technology for analyzing psychology is not merely an object of research. It has been adopted by several commercial enterprises. Israeli company Faception, for example, says it can recognize whether a person has a high IQ or pedophilic tendencies or is a potential terrorist threat.

Even if a correlation is found with a trait, experts have their doubts about the usefulness of such analyses. “All that the algorithms give us are statistical probabilities,” Rothe says. It simply is not possible to identify with certainty whether a person is Mensa material. “What the program can tell us is that someone who looks sort of like you is statistically more likely to have a high IQ. It could easily guess wrong four times out of 10.”

With some applications, incorrect predictions are tolerable. Who cares if Apply Magic Sauce comes to comically erroneous conclusions? But the effect can be devastating in other circumstances. Notably, when the characteristic being analyzed is uncommon, more errors are likely to be made. Even if a company’s computer algorithms were to finger terrorists correctly 99 percent of the time, the false positives found 1 percent of the time could bring harm to thousands of innocent people in populous places where terrorists are rare, such as in Germany or the U.S.
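The arithmetic behind this warning is worth spelling out. The Python sketch below makes purely illustrative assumptions: a city of one million people containing 10 actual terrorists, and a screening algorithm that is right 99 percent of the time for both groups. In other words, it flags 99 percent of real terrorists and clears 99 percent of innocent people. These numbers are hypothetical, not figures from any company.

```python
def screening_outcome(population, true_cases, sensitivity, specificity):
    """Expected true positives, false positives and the share of flagged
    people who are actually true cases (positive predictive value)."""
    innocent = population - true_cases
    true_positives = sensitivity * true_cases
    false_positives = (1 - specificity) * innocent
    ppv = true_positives / (true_positives + false_positives)
    return true_positives, false_positives, ppv

tp, fp, ppv = screening_outcome(1_000_000, 10, sensitivity=0.99, specificity=0.99)
print(f"{tp:.1f} real cases flagged, {fp:,.0f} innocent people flagged")
print(f"share of flagged people who are real cases: {ppv:.2%}")
```

Under these assumptions, roughly 10,000 innocent people are flagged alongside about 10 real cases. Fewer than one flagged person in a thousand is therefore actually a terrorist.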

Language Recognition and Suicide Prevention

Of course, automatic psychological assessments can also be used to help people live better. Suicide-prevention efforts are a prime example. Facebook has such an initiative. The company had noticed that users occasionally announce on its platform that they intend to kill themselves; some have even live-streamed their death. An automatic language-processing algorithm now flags suicide threats for the social network’s human reviewers. If a trained reviewer determines that a person is at risk, the person is shown support options.

Twitter posts might likewise be worth analyzing, according to Glen Coppersmith, a researcher at Qntfy, a company based in Arlington, Va., that combines data science and psychology to create technologies for public health. Coppersmith has noted that Twitter messages sometimes contain strong evidence of suicide risk and has argued that their use for screening should be seriously considered.

Taking a different tack, University Hospital Carl Gustav Carus in Dresden is using smartphones to measure behavioral changes, looking for those characteristic of severe depression. In particular, it is attempting to determine when patients with a bipolar affective disorder are in a manic or depressive phase (see “Smartphone Analysis: Crash Prevention”).

Even designers of algorithms that are created with good intentions must balance the potential for good against the risk of privacy invasion. Samaritans, a nonprofit organization that aims to help people at risk of suicide in the U.K. and Ireland, found this out the hard way a few years ago. In 2014 it introduced an app that scanned Twitter messages for evidence of emotional distress (for example, “tired of being alone” or “hate myself”), enabling Twitter users to learn whether friends or loved ones were undergoing an emotional emergency. But Samaritans did not obtain the consent of the people whose Tweets were being collected. Criticism of the app was overwhelming. Nine days after the program started, Samaritans shut it down. The Dresden hospital has not made the same mistake: it obtains permission from participants before it monitors their smartphone use.

Automated psychological assessments are becoming a part of the digital landscape. Whether they will ultimately be used mainly for good or ill remains to be seen.

Smartphone Analysis: Crash Prevention

If Jan Smith (a pseudonym) were to spend the morning in bed and miss a class, his absence would definitely sound an alarm. This is because the 25-year-old student has a virtual companion that is pretty well informed about the details of his daily life—when he goes for a walk and where, how often he calls his friends, how long he stays on the phone, and so on. It knows that he sent four WhatsApp messages and two e-mails late last night, one of which contained more than 2,000 keystrokes.

Smith suffers from bipolar disorder, a mental illness in which mood and behavior constantly swing between two extremes. Some weeks he feels so depressed that he can hardly get out of bed or manage the basic tasks of everyday life. Then there are phases during which he is so euphoric and full of energy that he completes projects without seeming to need sleep.

Smith installed a program on his smartphone that records all his activities, including not only phone calls but also his GPS and pedometer readings and which apps he uses, and when. This information is transferred to a server at regular intervals. Smith is taking part in a study coordinated by University Hospital Carl Gustav Carus in Dresden. The goal of the project, known as Bipolife, is to improve the diagnosis and treatment of bipolar disorders. Researchers intend to monitor the smartphones of 180 patients for two years.

They plan to collect moment-to-moment information about each participant’s mental state. Such data should be useful because bipolar patients are often unaware when they are about to have a depressive or manic episode. That was certainly Smith’s experience: “When I was on a high, I threw myself into my work, slept maybe three or four hours, and wrote e-mails to professors at three in the morning. It never occurred to me that this might not be normal. Everyone I knew envied my energy and commitment.”

The smartphone app is meant to send up warning flares. “The transferred data are analyzed by a computer algorithm,” explains Esther Mühlbauer, a psychologist at the Dresden hospital. For example, it recognizes when a participant makes significantly fewer phone calls or suddenly stops leaving the house—or works around the clock, neglecting sleep. “If our program sees that, it automatically e-mails the patient’s psychiatrist,” Mühlbauer says. Then the psychiatrist gets in touch with the patient.
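Bipolife's actual algorithm is not described here. The minimal Python sketch below illustrates the idea under stated assumptions. It compares each day's readings with the patient's own symptom-free baseline and flags any measure that lies more than two standard deviations away. The threshold, the metric names and all the numbers are hypothetical.

```python
from statistics import mean, stdev

def flag_deviations(baseline, today, threshold=2.0):
    """Compare today's readings with a patient's own baseline.

    baseline: metric name -> list of daily values from a symptom-free phase
    today:    metric name -> today's value
    Returns the metrics whose z-score exceeds the threshold, with that z-score.
    """
    flagged = {}
    for metric, history in baseline.items():
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation; needs at least two days
        if sigma == 0:
            continue  # no variation in the baseline, so a z-score is undefined
        z = (today[metric] - mu) / sigma
        if abs(z) > threshold:
            flagged[metric] = round(z, 1)
    return flagged

baseline = {
    "calls_per_day": [5, 6, 4, 5, 6, 5, 4],
    "hours_asleep": [7, 8, 7, 7.5, 8, 7, 7.5],
}
alerts = flag_deviations(baseline, {"calls_per_day": 1, "hours_asleep": 7})
if alerts:
    # In the study, this is the point at which the patient's psychiatrist is e-mailed.
    print("notify psychiatrist:", alerts)  # notify psychiatrist: {'calls_per_day': -4.9}
```

Because the comparison is against each patient's own history, the same number of calls can be normal for one person and alarming for another.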

The researchers first have to get a baseline, determining, for example, how particular patients use their cell phones during asymptomatic phases. Then the software notes when the behavior deviates from a patient’s norm so that treatment can be given quickly. Smith finds this monitoring very reassuring: “It means that there is always someone there who looks after my condition,” he says. “This can be a significant support, especially for people who live alone.”—F.L.