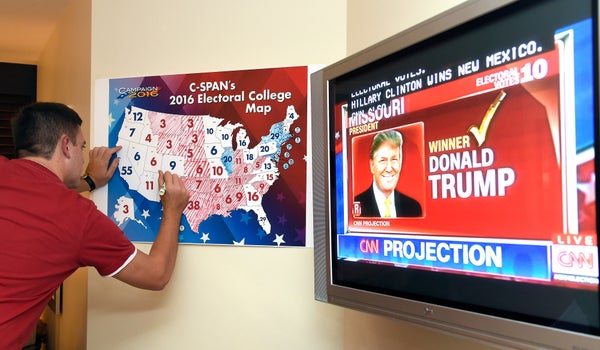

After months of predictions pointing toward a victory for Hillary Clinton, the U.S. presidential election took a dizzying detour from expectations on Tuesday night, and Donald Trump will be heading to the Oval Office. Trump racked up one swing state after another, leaving even the best poll aggregators dumbfounded. The idea behind poll aggregation is simple: The averaged outcomes of multiple polls will be more accurate than any one survey alone. But this elegant approach breaks down in practice, as the election results made clear. Although there was some variation among the aggregators about Clinton’s chances for victory (Nate Silver of FiveThirtyEight consistently produced the most conservative estimates whereas the Huffington Post and the Princeton Election Consortium consistently produced the highest chances), the major polling organizations overwhelmingly predicted that she would win, and they got it terribly wrong.

The reason for this is twofold: First, many pollsters are failing to produce high-quality polls. Second, aggregators are using imperfect statistical models to average results. In this cycle most experts place the greater blame on the first factor: the polls themselves.

Cliff Zukin, a political scientist at Rutgers University, says election polling is in crisis. As more people use cell phones instead of landlines (the latter of which make canvassing easier) and fewer respond to surveys, polling has grown increasingly expensive. Thus pollsters are working in a changing landscape: They’re fine-tuning their efforts to reach mobile phones, turning to online surveys and using statistical tools to correct for biases. But the accuracy of these new methods is largely untested, and there were simply fewer high-quality polls available to aggregate in this election season. “Our dominant paradigm has broken down,” Zukin says. “The aggregators have less good raw material to work with now than they did four years ago and eight years ago.” As a result, polling has become less scientific. Or in Zukin’s words: “It is an art-based science.”

But the biggest confounding factor in the polls, according to Zukin, is that survey respondents overstate their likelihood of voting. It is not uncommon, he says, for 80 percent of the respondents to state that they are definitely going to vote, when previous elections show turnout at around 60 percent. “So you need to go through the exercise of figuring out which of those 80 percent are really going to be the 60 percent who vote,” Zukin says. Although pollsters construct “likely voter” scales from respondents’ answers to various questions, research does not indicate which questions work best for this purpose. “There's not a magic bullet formula for that,” Zukin says. “And so you guess.” And if they guess wrong, the effects can be drastic—what happened Tuesday night is exhibit A. Although the exact numbers take time to process, there seems to be a growing consensus that turnout might have affected this year’s polls. “I think that when it comes to Donald Trump, the interpretation here is probably that pollsters did what they should do scientifically, which is to say make our best prediction based on last time,” says Josh Pasek, assistant professor of communication studies at the University of Michigan. “But the parameters on the ground were fundamentally different in that the kinds of people who voted this time were not a mirror of who voted last time. … Models underestimated the likelihood of turnout that poured from the relatively less-educated (who typically vote less often) white Republicans.”

At the end of the day every poll contains an unknown bias, whether it results from poor-quality data or underestimated turnout. So aggregators do their best to use the hands they are dealt—by building statistical models to better analyze the cards in those hands. Although these models vary in complexity, the simplest one is the so-called “poll of polls” approach, which simply averages the outcomes of all the polls under the assumption their respective biases will at least partially wash out. But some cause permanent stains. In October The New York Times discovered that one 19-year-old man in Illinois was shifting poll aggregators toward Trump. The man, who was interviewed by the U.S.C. Dornsife/Los Angeles Times “Daybreak” Poll, was weighted up to 300 times more than the least-weighted respondent. As a result, most aggregators dropped this poll from their list or gave it less weight. In retrospect, the poll is now known to have been the most accurate and will cause aggregators to take a second look at the metrics they use to determine which polls are reliable.
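
The “poll of polls” idea described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not any aggregator's actual method: the function names, the margins, the error values and the weights are all hypothetical. It shows why averaging helps when each poll's error is independent, and why it does not help when every poll shares the same bias.

```python
from statistics import mean


def poll_of_polls(margins):
    """Unweighted average of poll margins (candidate A minus candidate B, in points)."""
    return mean(margins)


def weighted_poll_of_polls(margins, weights):
    """Weighted average, for aggregators that trust some polls more than others."""
    total_weight = sum(weights)
    return sum(m * w for m, w in zip(margins, weights)) / total_weight


# Hypothetical numbers, chosen only for illustration.
true_margin = 1.0
independent_errors = [2.0, -1.0, -2.0, 1.0]  # poll-specific errors that cancel out
shared_bias = 3.0                            # an error common to every poll

polls_independent = [true_margin + e for e in independent_errors]
polls_shared = [m + shared_bias for m in polls_independent]

print(poll_of_polls(polls_independent))  # 1.0: independent errors wash out
print(poll_of_polls(polls_shared))       # 4.0: the shared bias survives averaging
```

Down-weighting a suspect poll, as aggregators did with the “Daybreak” Poll, corresponds to passing it a smaller entry in `weights`. Whether that helps depends on whether the suspect poll really was the less accurate one.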

But even if aggregators use the same initial polls, they might not predict the same outcome. For a study published in September, The New York Times gave four different research teams the same raw data and asked them to predict the outcome of the election. After scrutinizing the data carefully, all four teams came up with different results: not just different margins of victory, but different winners. “There are so many choices in building these models that it is an art in a lot of ways,” says Frederick Conrad, head of the Program in Survey Methodology at the University of Michigan. “It all becomes mathematical because it’s implemented in a model. But somehow intuitions are quantified.”

Once the raw data has been incorporated, the second-largest variable for aggregators to consider is the statistical model itself. Most aggregators use a hybrid approach in which they incorporate certain fundamentals, such as economic trends or a widespread desire to switch leadership to another party, into their models, along with the poll results. But aggregators differ as to how much weight their predictions should give to these fundamentals. Tuesday’s election strongly reinforced the idea that such fundamentals are actually in the driver’s seat, Pasek says. “While you might think it’s about the candidates, the things they say, the things they believe,” he explains, “in the end, those fundamentals tend to take over. Trump might have been elected simply because we’ve had a Democrat living in the White House for the past eight years.”

So in the aftermath of poll failures in this election, was there any big aggregator that looked much better than the others? It’s a tough call, given that they all had similar state-by-state estimates. But the fact that FiveThirtyEight was more cautious in its estimate of a Clinton victory should be highlighted. “It’s accurate for a funny reason, not because they really understood what was going on, but that they recognized that there was this potential for large-scale shifts to change the outcome in ways that we weren’t detecting,” Pasek says. One of the largest differences between FiveThirtyEight and the Huffington Post, for example, is how strongly they assume that states will move together. The Huffington Post does not assume the polls in one state will strongly affect those in another state, says Natalie Jackson, the Huffington Post’s senior polling editor. But FiveThirtyEight does. “So that means that we have a much lower probability of systematic error blowing up our entire map,” Jackson says.
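
The effect of assuming that states move together can be illustrated with a small simulation. This is a rough sketch and not either organization's model: the function `upset_probability`, the five states, the three-point leads and the error sizes are all hypothetical. Each simulated election adds one shared error to every state plus a separate error per state, then checks whether the poll leader loses a majority of states.

```python
import random


def upset_probability(leads, shared_sd, state_sd, trials=20000, seed=0):
    """Share of simulated elections in which the poll leader loses most states.

    leads     -- polled lead in each state, in points (hypothetical)
    shared_sd -- std. dev. of an error that hits every state at once
    state_sd  -- std. dev. of each state's own independent error
    """
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    upsets = 0
    for _ in range(trials):
        shared_error = rng.gauss(0, shared_sd)
        states_lost = sum(
            1 for lead in leads
            if lead + shared_error + rng.gauss(0, state_sd) < 0
        )
        if states_lost > len(leads) / 2:
            upsets += 1
    return upsets / trials


leads = [3.0] * 5  # five hypothetical states, each with a three-point lead

# Roughly the same total error per state in both cases; only the correlation differs.
independent = upset_probability(leads, shared_sd=0.0, state_sd=3.0)
correlated = upset_probability(leads, shared_sd=2.1, state_sd=2.1)

print(f"independent errors: {independent:.3f}")
print(f"correlated errors:  {correlated:.3f}")
```

Under these assumptions the correlated case produces upsets more often, because a single shared miss can flip several states at once. A model that treats states as independent will therefore tend to report more confidence in the leader.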

Although many political scientists were surprised by Tuesday’s results, they do not think it is time to give up on polls. “I think that if anything, this election suggested that the polls aren’t completely unreliable,” Pasek says. Most polls were reasonably accurate in predicting how the states would go—with the exception of Trump’s far better-than-expected performance in the upper Midwest. “In general, I think the polls gave a good barometer, but there were a couple sources of systematic error that seemed to push everybody off a little bit,” Pasek says. In order to better determine those errors, there will be a long period of self-examination to come. “It's safe to say the election polling industry has some work to do,” Jackson says. “But I'm very optimistic that we'll do that work and emerge a better, stronger field for it.” It is a thought that resounds across the business. “We'll come out of this election with a much firmer understanding of what worked and what didn't, once we see who did well and who did not,” Zukin says. “But right now we're just out there experimenting. It's the wild, wild West for us.”