One of mathematical biologist Lewi Stone’s typical papers bears the title: “The Feasibility and Stability of Large Complex Biological Networks: A Random Matrix Approach.” But the academic, who holds appointments at Tel Aviv University and RMIT University in Melbourne, recently went in for a change of pace: This month Science Advances published his study titled “Quantifying the Holocaust: Hyperintense Kill Rates During the Nazi Genocide.”

Stone’s analysis of deportation train records indicates about a quarter of Holocaust deaths were concentrated in a single period, from August through October 1942, at three camps in Poland. And the largest Holocaust murder campaign only abated because so few Jews were left in German-occupied Poland. Stone’s estimates of “kill rates” provide insight into the industrial methods the Nazis brought to bear. Scientific American spoke with Stone about his new paper.

[An edited transcript of the interview follows.]

You’re a mathematical biologist. Why did you decide to study the operations of a Nazi death camp?

When I was a high school student in the ’70s, before the internet, I found myself hanging around the local library in the Melbourne suburbs. This particular library had a set of shelves in the corner of the building, one of which was dedicated to books on the Holocaust, right by the maths books I was glued to.

Because of my interest in science and maths, one of these books fascinated me. In an appendix at the end of the book there were many carefully prepared charts and statistical tables with estimates of victims killed. The author had obviously gone to a huge amount of trouble to publish this data, but I never understood the purpose of these tables and figures. How did it give insights into the Holocaust? I promised myself that one day I would return to this book, and these data sets, and try to seek out patterns or something useful.

I went on to pursue a career in mathematical biology. Every five to 10 years I tried to search for that original Holocaust book, but never succeeded in finding it again. Meanwhile, for decades I had been working on the modeling of infectious diseases as they spread through contemporary populations during events like the deadly Spanish flu pandemic of 1918.

When a rare data set describing disease outbreaks in the Holocaust reached my hands, I became interested again in World War II. Soon I discovered the remarkable data of the Israeli historian Yitzhak Arad on railway transportation to the death camps—and, by proxy, deaths during Operation Reinhard, the largest single murder campaign within the Holocaust. I understood immediately that much could be learned about the Holocaust from this data set.

Briefly, what did you find?

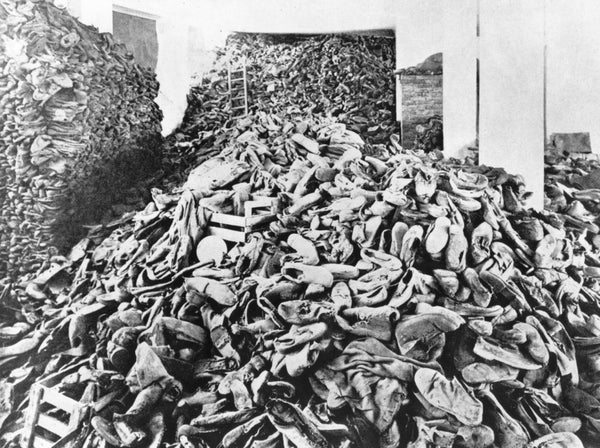

My work investigates a period in 1942, referred to as Operation Reinhard, when the Nazis efficiently shuttled about 1.7 million victims—often whole Jewish communities—across the European railway network in train carriages to Treblinka, Belzec and Sobibor. Almost all of those who arrived at these death camps were murdered, usually within hours in the gas chambers. Because the Nazis destroyed nearly all records of the massacre, it is important to try to uncover what actually happened at the time.

My study looks at the “kill rate,” or murders per day. My graph of the kill rate reveals a sudden massive slaughter after Hitler “ordered all action speeded up,” as one SS officer put it, on July 23, 1942. Approximately 1.5 million Jews were murdered in only 100 days, including shootings outside the death camps. On average, 450,000 victims were killed each month during August, September and October of that year. That’s approximately 15,000 murders every day.

The slaughter then soon terminated, as there were hardly any Jews remaining in the area to kill. The full scope of this genocidal slaughter and hyperintense killing appears to be documented in history in the vaguest of terms. Available information before this study was mostly reconstructed indirectly, partially conjectured and usually given on an annual timescale rather than daily or monthly. That meant missing the intensity of this three-month slaughter. But Arad’s data allowed me to characterize it far better than other attempts, because the data was given on a daily timescale.

While Operation Reinhard is considered the largest single murder campaign of the Holocaust, the extraordinary speed at which it operated to obliterate the Jewish people has been poorly estimated in the past and almost completely unknown to the general public. The minimal time in which the operation took place indicates the enormous coordination involved by a state machinery responsive to the Fuhrer’s murderous will to eradicate a people. The train records show how zones were emptied of Jewish communities one by one in an organized manner, and how intense kill rates were achieved in targeted areas that only slowed as victims ran out. My plots of the data and a film visualization highlight the pace and frenzy of this mass murder.

The atrocities of the camps are a well-established fact. What did you find in your study that surprised you?

It was the sheer scale of the atrocities that surprised me. The graphs show with chilling immediacy the huge sudden increase in killings in 1942 in Arad’s data set. At the Treblinka death camp, for example, it was not uncommon that two train transportations arrived in a day, bringing in 10,000 victims who were murdered in the gas chambers within hours of arrival. This high volume of killing could at times happen day in, day out.

As Raul Hilberg, author of The Destruction of the European Jews, remarked, “Never before in history had people been killed on an assembly-line basis.” Visualizing the dynamics in the form of graphs somehow re-creates the large-scale picture far more realistically than the proverbial 1,000 words. My spatiotemporal visualization shows a sudden drop in killings, pretty much when the huge area of German-occupied Poland had very few Jewish communities remaining and there were few victims left to murder. This type of data visualization powerfully communicates how purely targeted this genocide was.

As a scientist, I found another aspect of this event particularly surprising: the Bletchley Park code-breaking group in England (and perhaps Alan Turing himself, the British scientist who cracked the Germans’ infamous Enigma machine code used by the military to send encrypted messages) played an indirect but very important role in making statistical data from Operation Reinhard available. In 2000 a cryptic document referred to as the Höfle telegram was discovered in World War II archives in the U.K. British analysts had decoded Höfle’s message, encrypted by the Enigma machine on the 11th of January 1943, but most probably they missed its significance. Thanks to the work of historians Peter Witte and Stephen Tyas in 2000, we now understand that the telegram contained, in a few brief telegraphic lines, the detailed statistics on the total 1942 killings in Operation Reinhard death camps at Treblinka, Sobibor, Belzec and a few others. This is a very unusual story, and more research is needed to verify the accuracy of these statistics.

Undertaking this project must have been an emotional experience for you. Can you describe what you felt when you started to get your results?

Emotions definitely came into play even from the outset. I was reluctant for at least six months to go ahead and digitize Arad’s data to a format that I could analyze on the computer. The data after all represents human lives murdered in an extremely tragic genocidal event, making the data in many ways sacred. But since Arad had already compiled the numbers in great detail in his book, I ultimately decided that it would be a shame not to advance his efforts to the next step.

The data covered the 21-month period of Operation Reinhard, and initially I was not sure what to expect. To see that the great bulk of the massacre occurred in just over three months with something like 450,000 murders per month—about 15,000 per day for 100 days—was a life-changing experience for me. I was very shaken up from the graphs. Upon rereading the standard literature one would not come away with the picture I was seeing in the data. More specialist literature gave vague statements that were often not presented in a rigorously justified format, and none gave the “big picture” with the intense three-month period of slaughter I was seeing.

Why is it important to quantify the Holocaust—or other genocidal events—with statistics such as kill rates, tempo and spatial dynamics, going beyond the numbers for how many actually died?

Lack of data and inability to quantify it lead to uncertainty and misinterpretations. You can see it just in the way that Auschwitz was always the central symbol of the Holocaust. Only in recent years is this being reversed as more details of Operation Reinhard come to light. This is partly because there were very few survivors of the Reinhard death camps to leave us with details. Nearly all who entered were murdered. The operation was a tight secret and the Nazis destroyed many records. In contrast, there were relatively more survivors from Auschwitz to help better reconstruct the events that occurred there.

The data encodes what actually happened. If you can decode the data, there may be many things to learn that you might not ever be aware of otherwise. This is the great thing about Arad’s data set. It allows us to examine what happened with the train transportations to the death camps on a daily timescale. This is a huge advance as it means we now have the dimension of time to work with. Not having a daily timescale, and usually working at annual or semiannual timescales, seriously prevented historians from reporting the three-month peak in the Holocaust kill rate. It was simply off the radar for historians. With the temporal dynamics in hand it became possible to explicitly characterize the assembly-line dynamics of mass murder of the Nazis’ industrial killing apparatus.

Comprehensive data is thus essential to record what happens. Without it we will end up arriving at completely wrong conclusions. Although my study did not require highly complex statistical analytical techniques, modern data science methods can be very useful for dealing with contaminated data or incomplete data sets. So I think these methods should be applied more to the study of wars. There is now a new push to develop approaches for studying wars, conflicts and mass killing events.

Do your findings reveal anything about the Holocaust in relation to other genocidal events such as the killings in Rwanda?

Genocide scholars often compare rates of recent genocides to the rate at which the Nazi Holocaust occurred, treating the latter as a kind of benchmark for genocide severity. Currently, many social scientists maintain that the Rwandan genocide was the most “intense genocide” of the 20th century, with a sustained period of murders occurring at a rate three to five times the rate of the Holocaust. In my view, these sorts of comparisons have limited usefulness, and clearly have the effect of diminishing the Holocaust’s historical standing. My paper lists just a few of many quotes from comparative genocide authorities who make this argument about Rwanda, including ex-U.S. Ambassador to the United Nations, Samantha Power.

However, my work shows that while the Rwandan massacre claimed 8,000 victims per day over a 100-day period, the Holocaust kill rate was some 80 percent higher than this during a similar 100-day period in Operation Reinhard. As a simple calculation shows, this suggests that the Holocaust kill rate has been underestimated by a factor of six to 10. This is an unacceptable misrepresentation. So there is a need to be very careful when making comparisons, again pointing to the need to have good data. The details matter.
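
[The arithmetic behind that range can be sketched as follows. This is a reconstruction from the figures quoted in this interview, not the derivation given in the paper. The symbols $r_R$, $r_H$, $r_H^{\text{claimed}}$ and $k$ are labels introduced here for illustration only.]

```latex
% r_R: Rwandan rate quoted above; r_H: Operation Reinhard rate over its peak 100 days
r_R \approx 8{,}000 \text{ per day}, \qquad
r_H \approx 1.8\, r_R \approx 14{,}400 \text{ per day}

% The claim being disputed: Rwanda ran at k times the Holocaust rate, with k between 3 and 5
r_H^{\text{claimed}} = \frac{r_R}{k}, \qquad 3 \le k \le 5

% Factor by which the Holocaust rate is then underestimated
\frac{r_H}{r_H^{\text{claimed}}} = \frac{1.8\, r_R}{r_R / k} = 1.8\,k
\quad\Longrightarrow\quad 5.4 \;\le\; \frac{r_H}{r_H^{\text{claimed}}} \;\le\; 9
```

[Using the rounded figure of 15,000 per day in place of 14,400 gives a ratio of about 1.9 and a range of roughly 5.6 to 9.4, which is in the neighborhood of the six-to-10 range quoted in the answer.]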

Is there similar work that still needs to be done on Nazi genocide—and do you plan to pursue it?

I work in mathematical epidemiology. The spread of disease was in fact one of the Germans’ greatest fears, and became a German obsession in the Nazi era. The Jewish people were perversely viewed as plague carriers and spreaders of typhus, and this became a nontrivial contributing reason for the triggering of the Holocaust. For the Warsaw Ghetto in 1941 to 1942, which I have been studying, the recipe for disease spread is simple: “When one concentrates 400,000 wretches in one district, takes everything away from them and gives them nothing, then one creates typhus. In this war typhus is the work of the Germans,” as stated by microbiologist Ludwik Hirszfeld in Warsaw at the time.

I have some extremely interesting data sets of major typhus outbreaks in the Warsaw Ghetto, and using mathematical modeling I am able to show convincingly that there was an amazing kind of “medical resistance” to the German occupation. The residents of the Warsaw Ghetto, which incidentally contained a large proportion of doctors, put in place public health programs and other responses that were able to bring huge epidemic outbreaks to a grinding halt. You can see this clearly in both the data and the modeling: the epidemic just crashed in the middle of winter, which is almost completely unimaginable. Resistance doctors were implementing wide-ranging public health and anti-epidemic programs, and even running an underground medical university.

There is more work to be done. I am also interested in following and contributing to modern research drives to set up and investigate large databases of more recent wars and conflicts.